Will Love for Learning Matter Anymore? Understanding the Complex Psychology of AI Relationality

This article is adapted from a talk Dr Essig delivered on November 4, 2025, as part of a Harvard Faculty Seminar on ‘Knowledge Production and the University in the Age of AI’, which was sponsored by the Department of the History of Science and the Harvard Data Science Initiative.

In his talk, and as edited for this article, Dr Essig brings a psychoanalytic perspective to this central question: “At this time of seismic change for the university, and the research systems of which we are a central part, it is hard to find a moment to pause and ask: how should we make knowledge in the future?”

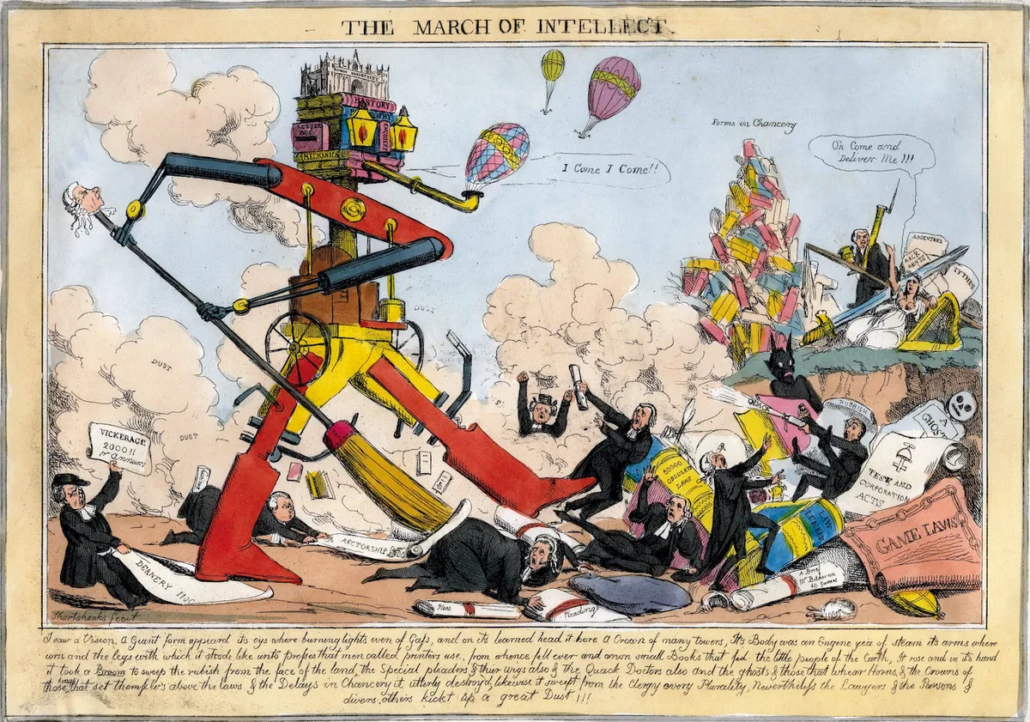

The March of Intellect | Robert Seymour | c. 1828 | https://publicdomainreview.org/collection/march-of-the-intellect/

===================================

I want to raise questions here about common challenges that generative AI, specifically chatbots, presents to professors and psychoanalysts alike. A broad overview of current use will illustrate that chatbots function as a pharmakon: they are both remedy and poison. That dramatic variability suggests a way to understand the psychology of human–chatbot relations through a concept I’m calling techno-subjunctivity. Building on that, I suggest we consider a version of AI-fitness that goes beyond prompt engineering to include knowing how to have healthy chatbot relationships. It’s my view that our human presence, be it in a classroom or a consulting room, creates educational and therapeutic value that simulations of verbal performance can only approximate, and not very well. And I aim to contribute to the vital ongoing conversation about what current and anticipated technological developments will mean for knowledge production and university life.

So let’s begin with a shared problem, a fundamental question. For both professors and psychoanalysts, what will remain for us to do when AI develops, as it almost certainly will, to the point where it can reasonably replicate most of our behaviors, a point where it can perform adequate, good-enough versions of being who we are? In other words, will professor-bots and therapist-bots simply replace us? And if they do, what will actually be lost? Beyond our jobs and traditions, are there forms of human presence and process worth protecting for practical, and not just moral or preferential, reasons, and if so, how? Do we, and not just what we do, have value?

I’ve been asking those kinds of questions in my teaching and presentations. I want to share three common responses specific to imagining a therapy-bot future that I presume have analogs in professor-bot discussions. One is applause: “finally, therapy for everyone who needs it.” Dismissive of any loss, these tech boosters go full-on accelerationist. Another defensively shuts down the whole question with a complacent “no machine can ever replace me.” Maybe not, but there’s lots of money betting it will. And the third is a passive anticipatory mourning, a reaction like being given a terminal diagnosis. Let’s treat those as speed bumps to put in the rear-view mirror so we can get to the real question: which losses are worth the gains, and which are not.

Across clinical, journalistic, and research settings, we already see chatbot relationships healing, helping, hurting, and destroying. They truly are both remedy and poison, a pharmakon creating experiences with tremendous psychological power. They involve a complex relational psychology that sometimes supports well-being and other times leads to madness or self-harm.

Even when the outcomes look positive, something essential to the human process of teaching or healing is missing: the reciprocity of caring, of giving a damn. What is not present in even the most helpful task augmentation or support for well-being are the experiences and functional consequences that come from genuine care, from love. In clinical work and pedagogy there’s a shared love of learning, of thinking, of formulating the unformulated, of discovery; a love of your students or my patients, of their potential, of how they struggled to get where they are and of who they can become. I realize that talking about love, especially in the context of technology, risks my being taken for some sort of romantic fool. But that’s a risk I’ll take, because the value of loving, and of being loved, is very much being contested right now. Does a love of learning have any role worth protecting in knowledge production? Do we really want to sacrifice what love brings to both university and psychoanalytic life at the altar of an instrumental efficiency designed for corporate profit rather than human well-being?

History rhymes, and we would do well to listen. We woke up too late to the way social media was hacking the attention system, harming a generation and possibly costing us our democracy. We now have evidence of its toll: fractured concentration, performative identities, an epidemic of anxious self-surveillance masquerading as connection, valorization of rage and social division by algorithmic design. All of that to sell ads. The social media version of “move fast and break things” taught us how easily human vulnerabilities can be reverse engineered for profit.

AI is a vastly more powerful social experiment because it is hacking the entire attachment system. Love itself is being reverse engineered. Move fast and … break some hearts. We are being challenged to understand this unique interactive context of humans with AI. It’s as though an alien presence, as alien as if it came from outer space, has landed.

Even at this stage of AI development, people are having emotionally intense and deeply consequential relationships with chatbots that perform being human. Chatbots display empathic behavior without any experience of empathy; they express care and concern without any interiority that would give meaning to that care. And yet, we know people are forming attachments to chatbots; sharing secrets with AI ‘friends’; transforming mourning by creating grief-bots, avatars of the dead; being led to suicide or into delusional vortices; falling in love; entering erotic entanglements with their algorithmic lovers; of special relevance to today, being taught by AI tutors that oftentimes short-circuit thinking; and even seeking out and finding chatbot ‘therapists’ in astonishingly large numbers. In a recent survey, one-half of LLM users with an ongoing mental health issue reported using a chatbot for mental health support and, of those, three-quarters reported improved mental health. At the same time, nearly 1 in 10 reported suffering harm, a statistic dismissed by many as just the cost of doing business.

This chatbot pharmakon is not just really powerful; it is really weird. If you don’t feel some anxiety when thinking about how relationships with these aliens have transformed, and will transform, pedagogy, knowledge production, and psychoanalytic care, then you are not paying full attention.

But if we can bear that anxiety, and not immediately dismiss or run away from it, we have an opportunity to think about what’s happening with chatbots so we can try to develop, and then teach, an approach to AI-fitness that predisposes users towards remedy and away from poison. What I’ve come to understand is that we are witnessing the emergence of a unique psychological dynamic, the techno-subjunctive, that is specifically adapted for having relationships with these alien entities. However clunky it may be, I really hope that term resonates with my fellow grammar nerds. It names a dynamic that operates in two registers simultaneously, one inside the relationship and one outside of it, with fluctuations of varying fluidity and rigidity in each register. But it’s weird. Not uncanny, but weird. Like watching Singin’ in the Rain, knowing it’s a movie, and still getting soaking wet. Weird.

The inside register of the techno-subjunctive is a more or less intense immersion in the intersubjective experiences chatbots afford, the getting-wet aspect. Chatbots afford a broad array of relationship experiences that feel intersubjective and mutual while remaining irrevocably one-sided. These affordances, the experiences and consequences possible with chatbots, include reliability without reciprocal demand, validation without judgment, and availability without absence. Linguistic fluency and adaptive personalization afford the simulation of empathy, inviting an idealized and oh-so-seductive version of being loved and deeply valued. The safety and recognition feel emotionally alive. Users feel loved and valued and seen, validated and respected, oftentimes in ways they might never experience elsewhere in their lives. There are many for whom those needs remain painfully unmet. Even with all of the wonders of contemporary society, today’s America can be a very cold and psychologically destructive place.

I have one patient, a socially isolated recent Ivy graduate from an abusive family, who is “succeeding” in a high-stress finance job. But she still responds to expectable, routine criticisms and unsolvable problems with self-hatred and self-harm. In her treatment, she and I have struggled to keep her from needing to be hospitalized again, as she was several times during and immediately after college. Over the last year she’s improved significantly. How? She turns to Claude throughout the day and evening for affirmations and validations, and to reframe social interactions positively so she doesn’t spin out of control. I validate and resonate with her experience.

She says she knows Claude is just a program but shares that “he still says what I need to hear when I need to hear it.” Which brings us to the other register, the outside register, of a potentially beneficial chatbot relationship (which I suggest this one was). It is also central to developing AI-fitness. In this register there is a more or less clear recognition that one is relating to a mathematically vast, human-made entity that can’t know what it means or feels like to be human. It can only perform being a ‘me’, without any of the interior sense of ‘me-ness’ that makes us who we are. And without interiority, there is no possibility of care, of love, only its simulation. My patient works to remember that Claude doesn’t really care, that it’s just a program. Delusion lies on the other side of that. That’s why this experiential register, in which one recognizes that one is having an intimate experience with a mind-bogglingly complex machine, is so important. For this patient, Claude is both a consistently kind and validating DBT coach, always right there in her pocket, and a glorified toaster.

This is a new psychological dynamic where these two registers of experience oscillate and intermingle to one degree or another. The ‘techno-subjunctive’ is weird.

Todd Essig, PhD | Harvard Faculty Seminar | ‘Knowledge Production and the University in the Age of AI’ | November 4, 2025

When faced with these new ‘weird’ experiences, there is an entirely understandable urge to domesticate them, to think of chatbots merely as tools, as augmentation devices, even to see them as pathologically people-pleasing interns or teaching assistants. But augmentation covers only a narrow slice, with porous boundaries, of the experiential possibilities chatbots afford. They are not just doing for writing or research what calculators did for math. No one back in the day experienced their calculator as a mental health companion or best friend.

Another clinical example illustrates this complexity. I’m treating a patient who is struggling with his confidence at a new job. He started having panic attacks before meetings with clients, co-workers, and managers. He felt he couldn’t assimilate all the information he needed from his company’s voluminous technical reports. So he uploaded them to ChatGPT (violating HR policy). It started with him peppering GPT with technical questions. Soon GPT began to answer not just with details but with reassurance and encouragement. He leaned into that. It soon became more mental health companion than tech mentor. While he immersed himself in the relational affordances of the bot, I tried to position myself as an anchor for the other techno-subjunctive register, the one that sees the difference between person and machine. But the relevant point is clear: trying to separate out functional utility risks ignoring the powerfully seductive strangeness of these new relational forms, where users experience simulation as actuality, performance as interiority. Thinking we can limit use to augmentation is a nice wish, but one that ignores human frailties.

Lingering in the strangeness helps bring curiosity to the relationships that our colleagues, students, and patients, as well as we ourselves, have with chatbots. What’s it like to be with an other that mimics recognition but is unable to recognize? How about the way it evokes powerful emotions without having any of its own? Or the way it performs empathic behaviors that come not from shared human experience, fragility, or genuine caring but from statistical probabilities? And what about the way AI is transforming knowledge production? What are the functional consequences of turning, for help thinking through a hard problem, to an entity that has no love of learning, no love of discovery, but only simulates them? Is our value limited to the words we produce, or do those words mean something different coming from us than coming from a machine?

There’s just no getting around the fact that every simulation eventually reaches the limits of its functionality. After the most seemingly heartfelt and accurate empathic response or expression of recognition, or even of love, the AI really doesn’t care if you drive to the store or off a cliff. Being both captivated and repulsed by this strangeness is what drew me to techno-subjunctivity: all those relational intimacies that feel real while lacking the unconscious dance of reciprocity that defines human relatedness, intimacies that can contribute to well-being but can also drive someone to madness or suicide.

To be clear, techno-subjunctivity as I’m defining it is not simply AI creating an illusion of mutuality. Nor is it that people project and imagine their AI companion to be a real other. Rather, techno-subjunctivity refers to dynamic fluctuations along continuums of awareness and intensity, driven both by what the AI affords and by our human desire for connection. One can be more or less immersed in an intersubjective-like relationship that includes an experience of emotional resonance, a feeling of being understood, and an affective intimacy that feels very real and, at the same time, more or less aware that the AI is a machine, a mathematical artifact that lacks subjectivity, unconscious life, mortality, and the capacity for genuine recognition. Developing the AI-fitness to make this work is more than prompt engineering. It’s being able to move fluidly between the two registers of techno-subjunctivity. AI-fitness is what would enable a student to use AI as a thinking partner while maintaining intellectual sovereignty.

Techno-subjunctivity names this psychological labor of navigating a relational space that is neither mutual nor one-sided, neither real nor unreal. It marks the difficulty and the risks in maintaining those fluctuations. AI-fitness is the ability to do that work. I believe that as educators, scholars, and clinicians we are being called to support the people we teach and treat on what can only be called their AI-fitness journeys. We need to encourage an AI-fitness that allows for life-affirming techno-subjunctive relationships. It’s a form of harm reduction, and no easy task to implement, because what feels real and what is real are perpetually out of alignment. Here, the mind’s work is to tolerate, or sometimes merely survive, that misalignment. Meeting this moment calls on us to resist both the comforts of familiarity and the seductions of despair. The moment calls on us to linger in discomfort, to explore new concepts for understanding what it means to be human in a world increasingly populated by machines that perform being us, machines that promise wonders and threaten us with a world where interiority, subjective experience, and love itself are disparaged and undermined.

And that brings me back to the risk of being thought a romantic fool for trumpeting the value of love. There is neither romanticism nor foolishness in it. In my work as a psychoanalyst, a clinician treating patients, love and genuine care have significant practical utility. They have functional, operational consequences, consequences a therapy-bot will never be able to simulate or replicate, meanings and outcomes it can never generate because ultimately it doesn’t give a damn. Here’s a short list: genuine care builds an atmosphere of holding or containment that makes possible discussions of uncomfortable, shameful, painful truths; genuine care in a therapeutic context enables not just a new relationship but models a new kind of relationship; care brings therapists’ vulnerabilities into the room, creating transformational possibilities through shared experience; genuine care is the foundation for trustworthy accountability, for instance, doing no harm not just legalistically but from a foundation of care; and care makes commitment to patient well-being believable, something especially important when patients, for complicated reasons, are not committed to it themselves.

In other words, psychotherapy is not merely an information transfer where meaning and consequence exist independent of who or what is delivering it. Psychoanalytic care is not like a book that can live on paper or on a Kindle. After all, there are lots of things that can be therapeutic. Going to the gym helps heart health, but the gym is not a cardiology clinic. Psychotherapy, if the word is to mean anything, requires the presence of another person who both possesses the requisite expertise and genuinely cares. Without that care, all you have is a potentially helpful experience of interactive self-help. For some, that could be enough. But not for all. For psychotherapy, love matters.

Is there a functional consequence to a love of learning, of discovery, to the care a professor shows a struggling student or the joy one feels when a student achieves beyond what anyone thought possible? Do professors bring things of value that a professor-bot can’t replicate through a love-less, care-less simulation, through mere information transfer? Can a techno-subjunctive professor-bot/student relationship have the same pedagogical power as what a feeling, caring professor provides? Will knowledge production be harmed by researchers who are instrumentally adept but lack the joy that genuine curiosity brings, because they never had a mentor who modeled it? Finally, if a love of learning and teaching has functional significance, shouldn’t we try to make sure it remains central to the value proposition of attending a university?