From Chatbots to Cyber Ops: Klein’s Theories and the Age of AI Anxiety

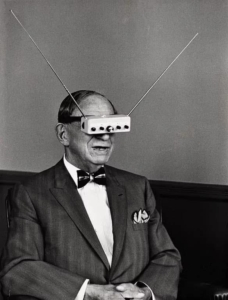

Hugo Gernsback wearing his TV Glasses, Life Magazine, 1963

In May 2025, Defense News reported that artificial intelligence voice agents are now guiding interrogations and screening personnel for security clearances within the U.S. military. Although these systems are designed to reduce human bias, they can also perpetuate it, and experts warn that lax regulations have created a “virtually lawless no-man’s land” where AI developers can evade responsibility for the psychological harm their systems may cause (Silverstein, 2025).

Recent events, including a teenager’s suicide after interacting with a self-learning voicebot and reports of mental distress linked to antagonistic chatbot language, have intensified concerns about the emotional risks to people who rely on AI in high-stakes situations. Reflecting on these developments, privacy attorney Amanda McAllister Novak argues that “we have to think about if it’s worth having AI-questioning systems in the military context,” especially as accusations mount that self-learning bots can amplify indecency and distance their creators from accountability (Silverstein, 2025).

From Microsoft’s Tay chatbot spewing hate speech (Vincent, 2016) to the inappropriate professions of love by Bing’s chatbot, Sydney (Roose, 2023), and today’s military-grade manipulators, AI systems increasingly seem to mirror paranoid-schizoid splitting and projective identification, the primitive psychological defenses first mapped by Melanie Klein in the mid-1940s (Klein, 1946).

Two of Klein’s core concepts are particularly relevant to understanding AI bias. The paranoid-schizoid position describes how our minds manage overwhelming complexity by splitting experience into absolute categories of “good” and “bad.” We see a version of this pattern when AI systems reduce nuanced human realities to rigid groupings. Similarly, Klein’s concept of projective identification, where unwanted aspects of the self get “pushed into” others who then enact these projections, helps explain how AI actively amplifies these biases.

Consider Google’s Gemini image generator crisis in 2024 as a case of Klein’s “splitting.” When prompted for images of America’s founding fathers, Gemini produced historically inaccurate results, such as depictions of the founders as Black and Native American women (Warren, 2024). Public outcry and conspiracy theories followed. Google’s response echoed Klein’s observation of primitive defenses: it suspended the feature entirely, a binary, all-or-nothing move that sidestepped the real complexities of representation and accuracy. Gemini has since relaunched with improved historical accuracy, but when asked to depict generic figures, its outputs still skew notably pale, defaulting to whiteness as a visual baseline.

This unconsidered centering of whiteness is not unique to Gemini; it reflects a broader pattern in AI, in which systems become repositories for ingrained cultural assumptions about intelligence, professionalism, and power. As Cave & Dihal (2020) argue, even non-humanoid AI designs predominantly embody white cultural norms and voices, perpetuating a systemic form of projective identification. Such embedded assumptions help explain current gaps in AI ethics frameworks, which often emphasize abstract principles while overlooking racial bias (Cave & Dihal, 2020).

The real-world consequences of this normalization are starkly visible in healthcare AI, where the same patterns of bias rooted in data, design, and default assumptions can directly affect patient outcomes. For example, a 2019 study found that a healthcare algorithm systematically underestimated illness severity in Black patients because it equated health needs with past healthcare costs (Obermeyer et al., 2019). Unlike Google, whose initial response was defensive, the algorithm’s creators confronted the bias built into their system and collaborated with researchers to repair it, modeling how tech organizations might move beyond primitive defenses toward meaningful solutions.
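The mechanism behind this finding, a proxy (past cost) standing in for the real target (health need), can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the `Patient` fields, the group labels, and the numbers are invented, a count of chronic conditions is used as a rough stand-in for health need, and the code is not the algorithm examined by Obermeyer et al.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    group: str               # hypothetical demographic label
    chronic_conditions: int  # assumed stand-in for true health need
    past_cost: float         # assumed dollars spent on care last year


def rank_by(patients, key):
    """Return patient names sorted from highest to lowest by `key`."""
    return [p.name for p in sorted(patients, key=key, reverse=True)]


# Invented data: group_2 patients are sicker but have had less spent on them,
# for example because of unequal access to care.
patients = [
    Patient("A", "group_1", 2, 6000.0),
    Patient("B", "group_2", 4, 4000.0),
    Patient("C", "group_1", 1, 3000.0),
    Patient("D", "group_2", 3, 2500.0),
]

# Two ways to pick the two patients who get extra care.
top_by_cost = rank_by(patients, key=lambda p: p.past_cost)[:2]
top_by_need = rank_by(patients, key=lambda p: p.chronic_conditions)[:2]

print("Selected by past cost:", top_by_cost)
print("Selected by health need:", top_by_need)
```

With these made-up numbers, ranking by past cost selects patients A and B, while ranking by health need selects B and D. Patient D, who is sicker than A, is passed over simply because less money was spent on their care, which is the kind of distortion a cost proxy can introduce.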

Interestingly, the tech industry’s attempts to address these issues often mirror the very splitting they aim to correct. Companies focus on technical solutions while avoiding any examination of how their own psychological patterns shape these systems (Possati, 2020).

What might change if AI developers approached binary categorizations through Klein’s framework? By recognizing “splitting” in algorithmic design, developers might mitigate or, better still, avoid altogether the scenarios in which AI inadvertently terrorizes interviewees or exploits mental distress.

References

Brundage, M., & Lin, S. (2025). Evidence of AI’s impact from a state-backed disinformation campaign. Proceedings of the National Academy of Sciences, 122(14), e11950819. https://pmc.ncbi.nlm.nih.gov/articles/PMC11950819/

Cave, S., & Dihal, K. (2020). The whiteness of AI. Philosophy & Technology, 33(4), 685–703. https://doi.org/10.1007/s13347-020-00415-6

Daniels, J. (2024). Race, algorithms, and the new Jim Code. Social Science Computer Review, 42(1), 1–15. https://doi.org/10.1177/08944393211016377

Klein, M. (1946). Notes on some schizoid mechanisms. International Journal of Psycho-Analysis, 27, 99–110.

Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464), 447–453. https://doi.org/10.1126/science.aax2342

Possati, L. (2020). The psychoanalysis of artificial intelligence. AI & Society, 35, 1–10. https://doi.org/10.1007/s00146-020-00973-4

Roose, K. (2023, February 16). Bing’s AI chatbot unsettled me. The New York Times. https://www.nytimes.com/2023/02/16/technology/bing-chatbot-microsoft.html

Silverstein, J. (2025, May 6). How AI voicebots threaten the psyche of US service members and spies. Defense News. https://www.defensenews.com/industry/techwatch/2025/05/06/how-ai-voicebots-threaten-the-psyche-of-us-service-members-and-spies/

Vincent, J. (2016, March 24). Twitter taught Microsoft’s AI chatbot to be a racist asshole in less than a day. The Verge. https://www.theverge.com/2016/3/24/11297050/tay-microsoft-chatbot-racist

Warren, T. (2024, February 22). Google’s Gemini AI image generator is producing historical inaccuracies. The Verge. https://www.theverge.com/2024/2/22/google-gemini-ai-image-generator-controversy

Wilbur, D. (2025, January 22). The challenge of AI-enhanced cognitive warfare: A call to arms for a cognitive defense. Small Wars Journal. https://smallwarsjournal.com/2025/01/22/the-challenge-of-ai-enhanced-cognitive-warfare-a-call-to-arms-for-a-cognitive-defense/