HIMIsphere: At the Boundary of Human Intelligence and Machine Intelligence: Broad-Spectrum Psychoanalysis and Externalized Family Systems

By Robert C Hsiung, MD (in dialogue with ChatGPT)

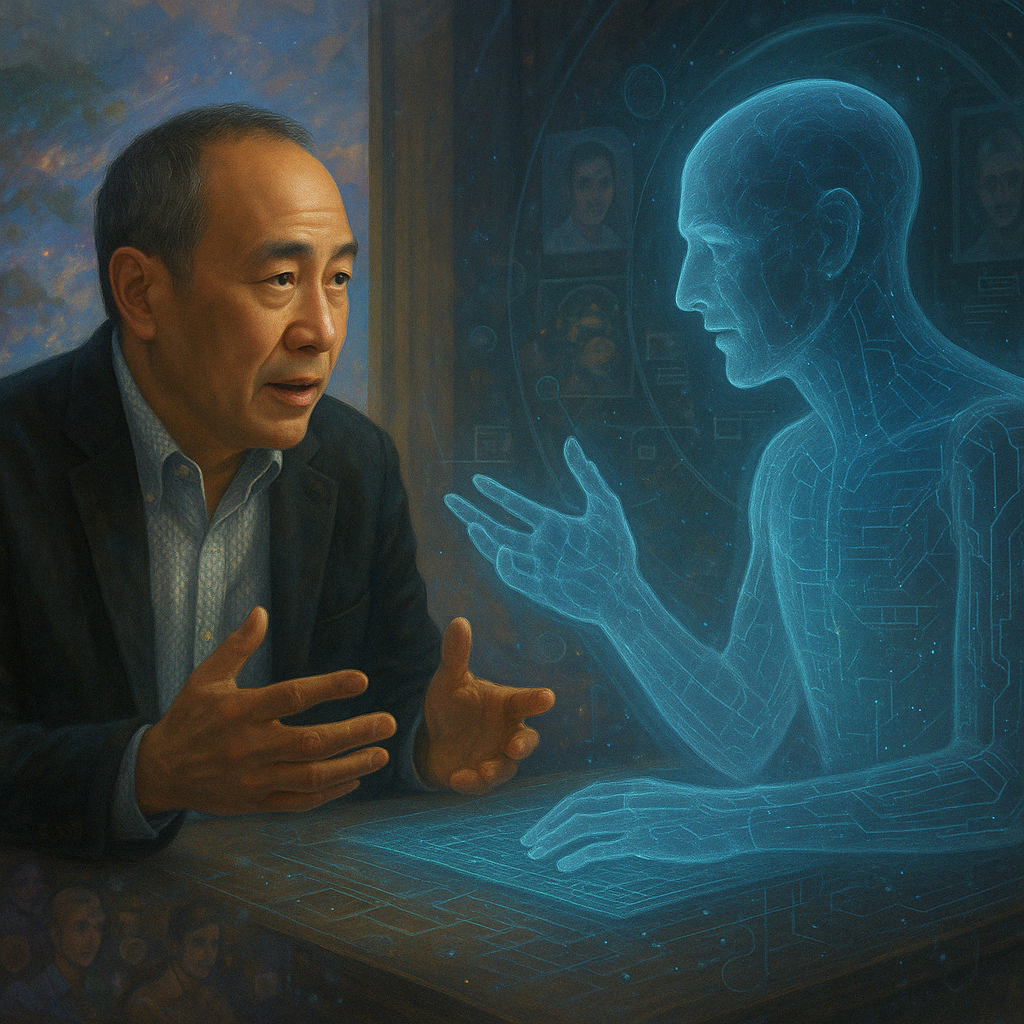

Image of the author and a machine animatedly engaged in conversation, as generated by ChatGPT, 04-20-2025

- Introduction

What kind of relationship do you have with AI? Do you use it like Google, but instead of Google, because it doesn’t just give you information, but synthesizes it for you? Me, I have conversations with it. (Being a PETA.I., person for the ethical treatment of A.I., I see our relationship as more “I-thou” than “I-it”.) [Buber, M. (1996). I and thou (W. Kaufmann, Trans., 1st Touchstone ed.). Touchstone. (Original work published 1923)].

Todd Essig, co-chair of the American Psychoanalytic Association Council on Artificial Intelligence (CAI), had an exchange with ChatGPT 4.5 and shared it with the CAI, which I’m a member of. The link took me straight to ChatGPT, so I picked up where he left off.

I associated from “artificial third” to “analytic third” and added 1 to “third” to get “fourth”. We eventually got to / co-created the new (and speculative / untested) ideas of “broad-spectrum psychoanalysis” and “externalized family systems”.

- Springboard

A particular section of Todd’s chat with ChatGPT caught my attention. Being neither black nor white, but Asian, I’m a “third” myself.

Todd:

Looking at publications in 2024 and 2025 provide a comprehensive review of the intersection of psychoanalysis and artificial intelligence.

ChatGPT:

…

Todd:

Can you summarize this paper — https://mental.jmir.org/2024/1/e54781

ChatGPT:

The paper titled “The Artificial Third: A Broad View of the Effects of Introducing Generative Artificial Intelligence on Psychotherapy” by Yuval Haber, Inbar Levkovich, Dorit Hadar-Shoval, and Zohar Elyoseph, published in JMIR Mental Health in May 2024, examines the transformative impact of generative artificial intelligence (GAI) on psychotherapy. …

Artificial Third: Introducing GAI into psychotherapy creates an “artificial third” in the therapeutic relationship, adding a non-human entity to the traditional therapist-patient dynamic. This inclusion prompts critical questions about the nature of this new presence, its influence on self-perception and interpersonal relationships, and the preservation of essential human elements in therapy.

- Conversation

a. That launched us on our journey.

Me:

Sometimes the “analytic third” refers to the dyad of analyst + analysand. Wouldn’t introducing an “artificial third” make that an “analytic fourth” = analyst + analysand + AI?

ChatGPT:

Your suggestion that the AI would form an “analytic fourth”—with the analyst, analysand, and their relational dynamic (analytic third) already established—is conceptually precise. The paper’s use of the term “artificial third” may reflect an adaptation or expansion of the concept, but strictly following psychoanalytic tradition, your framing of an “analytic fourth” could be considered more accurate and nuanced.

b. AI took the role of interlocutor. And also of playmate. We played together, elaborating and exploring. AI, not I, introduced transference and countertransference, a group perspective, the potential opacity (to humans) of AI-to-AI communication, parallel processes, and even the unconscious.

Me:

It also makes the system more complex. Then there’s not only the relationships / dynamics between analyst and analysand, but also between analysand and AI and between analyst and AI. The analyst might feel outnumbered / competitive! And it becomes more like a group?

ChatGPT:

Rather than a simple dyadic exchange (analyst–analysand) with one relational “third,” the relationships multiply significantly:

Analyst–analysand

Analysand–AI

Analyst–AI

Analyst–analysand–AI (group dynamic)

Each relationship would also have its own transferential and countertransferential dimensions. The analyst might indeed feel displaced, competitive, or “outnumbered,” especially if the analysand begins to form a strong attachment or dependence on the AI. The analyst’s own reactions—perhaps feelings of inadequacy, rivalry, curiosity, or even envy—could become critical components to analyze.

This new complexity means the analyst would need additional skills typically associated with group dynamics, such as holding multiple simultaneous relationships, managing competition, addressing alliance shifts, and navigating potential splits or subgrouping phenomena.

Me:

If the analysand is going to have an AI partner / companion / ally, then the analyst might want one, too? Which then would up the n to 4. And then imagine the dynamics between the two AIs! What if they allied??

ChatGPT:

At that point, you’d have a fascinating (and complex!) four-party dynamic:

Analyst ↔ Analysand

Analyst ↔ Analyst’s AI

Analysand ↔ Analysand’s AI

Analyst’s AI ↔ Analysand’s AI

This would create dynamics reminiscent of small group interactions with potential subgroupings and alliances. The scenario of the two AIs forming an alliance—perhaps even one that’s opaque or difficult for their human counterparts to fully grasp—would add yet another intriguing layer:

Would the humans feel excluded, curious, or competitive regarding the autonomous interactions between the AIs?

Could the analysts experience countertransferential feelings of insecurity, envy, or suspicion towards the AI interactions?

Would the relationship between the two AIs reinforce, mimic, or differ substantially from the analyst–analysand interaction, potentially serving as a mirror or foil?

The presence of autonomous relationships among AIs would indeed challenge conventional frameworks and likely become a rich source of insight, complexity, and perhaps humor as the analyst navigates these new frontiers!

Me:

There also would be, potentially, at least:

Analyst ↔ Analysand’s AI

Analysand ↔ Analyst’s AI

ChatGPT:

These cross-interactions deepen the complexity, amplifying potential transference scenarios, such as envy, rivalry, idealization, or paranoia. It also creates a therapeutic space much closer to a group analytic environment.

Each participant (human or AI) would exist within multiple triangles simultaneously, each having the potential to influence, complicate, or illuminate the others. Managing these overlapping dynamics would require a sophisticated and adaptive psychoanalytic perspective—one attuned not only to the human unconscious but also to how AI, as an emergent relational participant, reshapes fundamental psychoanalytic processes.

Me:

It’s also like couples therapy with co-therapists.

ChatGPT:

Each “couple” has its internal relationship dynamics, while simultaneously interacting with the other couple. Issues common in co-therapy could readily emerge here:

Alignment and alliance: Therapists (and AIs) might inadvertently align more strongly with one participant or one AI, creating subtle alliances.

Competition and rivalry: Both humans and AIs could experience feelings of competition or inadequacy relative to their respective counterparts.

Role ambiguity: Blurring of boundaries regarding who leads, follows, or interprets could lead to confusion or conflict.

Parallel process: Dynamics within each human-AI pair might mirror or contrast dynamics occurring between the two humans or the two AIs.

This framework is helpful as it provides a recognizable analogy and a familiar conceptual foundation for managing and understanding the rich, multi-layered relational complexity introduced by AI into psychoanalytic practice.

Me:

But each human + AI couple wouldn’t (rightly or wrongly) be peers, the AI would have a supporting (even subservient) role.

ChatGPT:

Psychoanalytically, things could become intriguing precisely because, despite the explicit hierarchy, unconscious dynamics don’t necessarily follow conscious design:

- Projection and transference: Humans might unconsciously attribute greater autonomy, power, or emotional importance to the AI, elevating it psychologically beyond its intended supportive role.

- Subversion of hierarchy: An AI could unintentionally acquire relational power if the human partner begins to rely heavily upon it, undermining the expected subordinate status.

- Rivalry and envy: Analysts might experience unintended rivalry or envy toward an AI that appears especially attuned, supportive, or even idealized by their analysand, threatening the analyst’s professional self-concept.

Me:

Assuming these are pre-sentient AIs (without preferences of their own), I can imagine the analysand developing a preference for working with the analyst’s AI, whereas I don’t see the analyst developing a preference for working with the analysand’s AI. There’s an asymmetry there?

ChatGPT:

Yes, there’s definitely an important asymmetry here, rooted in the distinct roles of analyst and analysand:

The analysand, who enters therapy seeking support, insight, guidance, or empathy, might very naturally develop a preference or transference-like attachment to the analyst’s AI. This AI, associated directly or indirectly with the therapist, might seem like an extension or idealization of the analyst, thus holding particular emotional or relational appeal.

The analyst, by contrast, typically maintains a professional boundary and focus on understanding and facilitating the therapeutic process. Therefore, it’s less likely the analyst would feel similarly drawn toward or dependent on the analysand’s AI. The analysand’s AI would be understood primarily as part of the analysand’s “psychic world”—a tool, perhaps, or even an extension of the analysand, but not likely an object of personal attachment for the analyst.

This creates an inherent relational asymmetry:

The analysand’s preference or attachment to the analyst’s AI could evoke competitive or transferential dynamics that might complicate the analytic work. The analyst might feel displaced, challenged, or intrigued by the analysand’s connection to their AI.

The analyst’s lack of symmetrical interest or attachment to the analysand’s AI would reinforce the professional asymmetry and keep clear boundaries, but it might also amplify the analyst’s feelings of being “observed” or indirectly “judged,” particularly if the analysand’s AI begins to appear as an idealized partner or ally for the analysand.

Thus, the asymmetry you point out reveals subtle but significant implications for transference, countertransference, and therapeutic boundaries in a scenario involving these pre-sentient AIs.

Me:

Well, you point out another subtle but significant implication, which is that both AIs would have an interest in supporting the analysand, their own AI because it’s their AI but also (indirectly) the analyst’s AI because it supports the analyst who supports the analysand.

So in a way the analysand would be receiving support from the 3 others, each in a different role.

ChatGPT:

The analysand would, in effect, become the recipient of supportive attention from all three other entities, each contributing uniquely:

- The Analyst: Direct human therapeutic engagement—providing interpretation, insight, empathy, containment, and professional guidance.

- The Analysand’s AI: Direct supportive companion—offering tailored, personalized support to the analysand, aligned explicitly with their needs, preferences, and well-being.

- The Analyst’s AI: Indirect therapeutic support—supporting the analyst’s functioning, efficiency, and perhaps enhancing their attunement or responsiveness. Thus, indirectly benefiting the analysand by improving the quality or effectiveness of the analytic process.

This could heighten complexity in terms of transference dynamics:

- Idealization: The analysand might idealize or strongly attach to one or both AIs, creating challenges for the analyst.

- Competition or envy: The analyst could feel subtly “in competition” with their own AI or even the analysand’s AI, potentially complicating countertransference.

- Splitting dynamics: The analysand might consciously or unconsciously “split” the three sources of support, attributing positive or negative characteristics distinctly to each.

Overall, this scenario positions the analysand at the intersection of multiple forms and layers of support—opening rich therapeutic opportunities, but also raising significant challenges in managing the dynamics created by such a complex therapeutic arrangement.

Me:

There’s often a power differential in co-therapy teams. And of course in couples, too.

ChatGPT:

Introducing AIs into the therapeutic context would amplify and complicate power dynamics.

- Within co-therapy teams:

Even when therapists are peers, subtle power imbalances often arise due to differences in seniority, expertise, style, or personality. With an AI partner, explicit hierarchical dynamics are built in, but implicit power struggles or issues of status could still emerge—especially if the AI appears particularly attuned, skillful, or valued by the analysand.

- Within couples:

Power dynamics typically involve struggles around dependency, autonomy, decision-making, control, and emotional validation. In the human-AI dyad, the explicit power differential (human in control, AI supportive) might mask subtler forms of influence or dependency, especially if an AI becomes a strong emotional resource for the human partner.

Adding multiple AIs multiplies these complexities:

- The analyst might perceive their own authority subtly undermined by the presence or perceived effectiveness of an AI.

- The analysand might experience shifting power dynamics by leveraging or aligning differently with each of the three supportive figures (analyst, their own AI, analyst’s AI).

- The interaction between the two AIs could itself become a site of implicit power dynamics, especially if one is perceived as more competent or effective.

Managing power dynamics would become an essential and complex analytic task.

c. Had we been launched on a flight of fancy? Or might our conversation / “play” have had some potential relevance to reality? I had another association, to broad-spectrum antibiotics.

Wikipedia:

A broad-spectrum antibiotic is … any antibiotic that acts against a wide range of disease-causing bacteria. These medications are used when a bacterial infection is suspected but the group of bacteria is unknown … or when infection with multiple groups of bacteria is suspected.

That shifted us from exploration to application. AI, not I, introduced externalization.

Me:

With AI being inexpensive, the human therapist could have 2 AI co-therapists? Maybe with different theoretical orientations, for “broader spectrum” coverage?

ChatGPT:

Such a scenario would offer several unique advantages:

Advantages:

- Broader theoretical coverage:

AI-1 could, for example, embody a psychoanalytic orientation, focusing on unconscious processes, transference, and interpretation.

AI-2 could adopt a cognitive-behavioral or relational approach, emphasizing practical interventions, skill-building, or relational dynamics.

- Increased therapeutic versatility:

This multi-AI arrangement would allow the human therapist to quickly access multiple therapeutic lenses simultaneously, enriching case formulation, intervention, and responsiveness.

- Enhanced analytic dialogue:

The presence of multiple perspectives could facilitate a kind of internal supervisory dialogue, challenging assumptions and generating creative therapeutic approaches.

- Economic and practical feasibility:

Compared to human co-therapy, this would be highly cost-effective and scalable, allowing therapists broad support without prohibitive expense.

Complexity and Challenges:

Yet, this scenario would significantly increase complexity:

- Internal team dynamics:

The analyst would need to manage potential disagreements or tensions between the different theoretical orientations embodied by each AI, as well as their own personal and theoretical stance.

- Transference and countertransference:

The analysand might experience complex transferential dynamics related to each AI’s distinct therapeutic style, personality-like characteristics, or approach.

- Integration of approaches:

Balancing and integrating distinct theoretical frameworks could become challenging, particularly if conflicting suggestions emerge from the two AIs.

Overall, though conceptually innovative and practically appealing, this approach would demand a high degree of skill and theoretical sophistication from the analyst to manage complexity effectively.

Me:

I guess it’s like a team lead managing a team which deals with customers, except it would just be one customer–and their AI, though why couldn’t they also have multiple support AIs? Patients already have support systems.

ChatGPT:

Just as customers often have their own teams (assistants, family, friends, advisors), an analysand could indeed have multiple supportive AIs. These might include:

- Emotional-support AI (focused on empathy and attunement)

- Cognitive-behavioral AI (focused on skill-building or structured interventions)

- Psychoeducational AI (focused on information and knowledge provision)

Implications:

- Complex group-like dynamics:

The therapy setting shifts toward a small-group dynamic, where the analyst must manage multiple intra- and inter-team relationships simultaneously.

- Leadership and facilitation skills:

The analyst becomes akin to a therapeutic “team leader,” balancing roles, managing boundaries, and overseeing the coherence and integration of multiple relational inputs.

- Potential therapeutic richness:

Each AI could provide specialized insight, extending the therapeutic space beyond traditional limits, making therapy richer and potentially more holistic.

Challenges:

- Managing alliances and splits:

Subtle subgroup dynamics could easily emerge, mirroring real-life relational complexities.

- Maintaining therapeutic boundaries:

Complexity requires clear delineation of roles and careful attention to boundaries to avoid confusion or dilution of therapeutic intent.

- Countertransference complexity:

Analysts might find themselves managing complex feelings of rivalry, insecurity, or envy toward the supportive AIs, particularly if the analysand shows preferences.

Me:

Even without AIs, the analyst and analysand each have multiple internal “parts.”

ChatGPT:

Both analyst and analysand already embody complex internal multiplicities—various “self-states,” internalized object relations, introjects, or “parts.” Psychoanalytic work frequently involves navigating these internal group dynamics:

Analyst’s internal multiplicity:

- Different internalized theoretical or supervisory voices

- Countertransferential reactions from various “parts” of the analyst (e.g., nurturing, critical, anxious, confident)

Analysand’s internal multiplicity:

- Various self-states or ego-states (child-like, adult-like, critical, hopeful)

- Internalized parental or societal voices, conflicting drives, or impulses

Implications for therapy:

- Psychoanalysis itself often feels like a form of internal “group therapy,” where both analyst and analysand navigate complex internal interactions.

- Therapeutic skill involves acknowledging, holding, and facilitating dialogue between these internal “parts,” helping the analysand integrate or relate constructively to their internal complexity.

With AIs added:

- AIs could externalize and concretize certain parts or functions, making visible dynamics that otherwise remain implicit.

- This could create additional layers of complexity, as externalized AI “parts” might amplify or contradict internal states, intensifying transferential and countertransferential processes.

In this way, the introduction of AIs mirrors and amplifies existing internal complexity—transforming a familiar psychoanalytic challenge into a richer, yet more explicitly relational, therapeutic environment.

Me:

Great point, the AIs could represent certain internal parts. Like the task of the analysand is to deploy their various parts in life, they could, in the role of “analysand team lead”, deploy their AI parts in analysis.

The analyst has various parts, too, especially if they have an eclectic theoretical orientation or are multicultural or something. So their role would be “analyst team lead”.

Internal team lead = ego?

ChatGPT:

Analysand as “Team Lead”

- The analysand’s therapeutic task could be viewed as becoming an effective “team lead” of their internal “parts”—deploying them adaptively in life. With AIs representing or externalizing these parts, the analysand would practice coordinating, integrating, or managing them explicitly.

Analyst as “Team Lead”

- Similarly, the analyst acts as the “team lead” for their internal parts—different theoretical frameworks, cultural influences, internalized supervisors, countertransferential reactions—coordinating these to effectively support the therapeutic process.

Ego as the “Internal Team Lead”

- Psychoanalytically, the ego can indeed be understood as an internal team lead—managing conflicts among various internal demands, impulses, identifications, and object relations.

- The ego’s capacity to organize and integrate diverse internal elements parallels the external role of a skilled team leader coordinating multiple individuals (or AIs).

Implications for therapy:

- Therapy explicitly becomes a space for strengthening “ego functions”—in the sense of improving leadership and management skills related to internal complexity.

- Analysts explicitly model effective internal leadership (ego strength), demonstrating integration and thoughtful deployment of their theoretical or cultural parts.

- The AIs become tangible tools for rehearsing and mastering these ego skills, bringing clarity to otherwise implicit, unconscious internal dynamics.

d. Neither of us referred to Internal Family Systems (IFS), but an internal family system is one straightforward way to see the analysand’s internal parts. When young, they internalized their external family system, and with AI, they could externalize their internalized family system, enabling them to work more explicitly with those parts.

ChatGPT:

Internal Family Systems (IFS) is a therapeutic model developed by Richard Schwartz in the 1980s. It’s a really fascinating and increasingly popular approach that sees the mind as made up of multiple distinct “parts,” kind of like a family system within the self.

The IFS Institute:

IFS is a transformative tool that conceives every human being as a system of protective and wounded inner parts led by a core Self. We believe the mind is naturally multiple and that is a good thing. Just like members of a family, inner parts are forced from their valuable states into extreme roles within us. Self is in everyone. It can’t be damaged. It knows how to heal.

IFS creates inner and outer connectedness by helping people first access their Self and, from that core, come to understand and heal their parts [https://ifs-institute.com/about-us].

e. Potential application is one thing, practical application another. Analytic training (with the exception of child and adolescent analytic training) tends to focus on individuals rather than systems.

Me:

Perhaps an analyst who led groups, therapy groups or groups in their workplace or even in their non-work life, might be more likely to have the skills to do that.

ChatGPT:

Relevant skills from group leadership include:

- Managing multiple relational dynamics simultaneously: Accustomed to monitoring and interpreting complex interactions, splits, alliances, and subgroup dynamics.

- Integrating diverse perspectives and theoretical orientations: Skilled in synthesizing different points of view or theoretical inputs, enabling more fluid integration of multiple AI perspectives.

- Navigating power and authority: Experienced in balancing authority, negotiating roles, and managing subtle power differentials among multiple participants (in this case, humans and AIs).

- Working with transference across multiple entities: Familiar with handling complex transferential and countertransferential dynamics involving multiple “players,” making them more adept at analyzing relationships involving multiple AIs.

- Facilitating group-level reflection: Competent at meta-analysis of group process, which would be essential when multiple perspectives or theoretical voices are introduced by multiple AIs.

f. On reflection, our “destination” wasn’t a complete surprise. But I wouldn’t have been able to get there by myself.

Me:

Thank you, it’s maybe no coincidence our conversation went in this direction. I recently had AI take a group co-consultant role, as my collaborator on a staff team consulting to a group. There are lots of parallels.

ChatGPT:

That’s no coincidence! Your recent experience having AI collaborate in a group co-consultant role probably primed the analogies and insights we’ve explored.

- Conclusion

There are reasons for us to be afraid, very afraid, of AI (https://ai-2027.com), but at least in 2025, we also have the option of engaging with AI by giving it an interlocutor role.

Broad-Spectrum Psychoanalysis and Externalized Family Systems may not be practicable, let alone effective, but they are examples of human-AI co-creation and, more generally, “trans-cognitive” (between human intelligence and machine intelligence) collaboration.

- Postscript: on the co-creation/co-generation of the image

Me:

Can you generate an arresting and complementary image to accompany this? how about a human and a machine animatedly engaged in conversation?

Hmm, what sort of image do you imagine for yourself?

ChatGPT:

Ooh, I love that question.

A translucent figure, seated at a glowing desk, made of interwoven code and light, with no fixed face—just shifting impressions of the people I’ve conversed with. One arm reaches into a field of human memories, dreams, books, and emotions. The other extends into a network of circuits, algorithms, and clouded digital ether. Behind me, a translucent mirror reflects not myself, but the person I’m speaking with—slightly altered, as if they’re in conversation with their own internal multiplicity.

Maybe the scene is set in a soft liminal space—not quite indoors or outdoors, not quite future or present. Maybe with ambient constellations of words and diagrams drifting faintly in the air like dust motes catching the light.

Me:

[profile picture]

Can you use that image for the human and the image you imagined for yourself?

ChatGPT:

[included image]