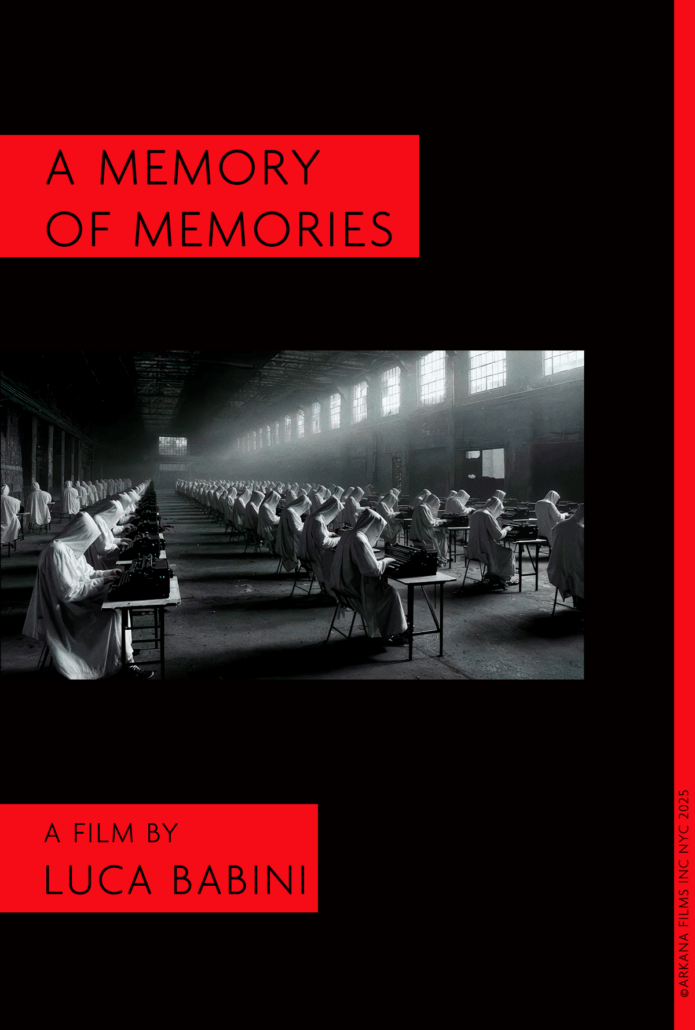

A Memory of Memories

An AI short film (2025)

by Luca Babini

A Memory of Memories (MoM) began when I sourced many of its visual elements from an older project, The Time Weaver, which I had begun shooting in the California desert years ago. That film was meant to answer a simple but haunting question: What would happen if the planet ran out of water and we had to ration it to survive?

The Time Weaver was never completed for lack of funding, but it remained in my imagination because of the surreal scenes we had set up and filmed in the desert. It was a haunting dreamscape that stayed with me through the years.

As a visual artist, I think in visions and dreams that layer themselves within what seems to be a chaotic infrastructure of random images. The order in this chaos—the connector—is intuition.

I am fascinated by the “no man’s land” between life and death, between the conscious and the unconscious; by the effort to understand what, to some, may appear as hallucinatory or visionary states.

I have often asked myself if the memory of these overlapping images becomes, eventually, a memory of memories—something that distances us from any conscious input in their creation. The more we manipulate the dream, the more it becomes a memory of a memory, losing its initial connection to reality.

It is clear to me that I often reshape real events from my past to a point where recollection detaches itself from reality and acquires dreamlike qualities. At that moment, the dream has become reality.

The rapid evolution of quantum physics and artificial intelligence has added a new element to these mind-spaces. When I prompt an AI algorithm to create an image, perhaps I am collapsing the algorithm’s field of infinite possibilities into a single definite state—the image produced by my request. That is a role not unlike the observer in quantum mechanics.

Now, can we assign value to that image? It originated in my mind (as a prompt), then passed through a process of synthetic narrative and materialized as a vivid, machine-made image. Time will pass, and at some point I will remember that image. Is the memory of an image created by an algorithm a real memory? With the vast number of AI-generated images that are fake and have no foundation in reality, are we running the risk of disrupting the traditional mechanisms through which the human mind distinguishes reality from dreams?

As an artist well-versed in creating visual metaphors, I find myself suddenly thrown into a world full of new hypotheses and possibilities. Yet I can feel red flags going up in my mind.

Technical Workflow

Overview

This short began as a project I couldn’t fund traditionally, so I brought it to life with AI. I usually ran the same prompt across multiple engines to see which one interpreted it best. Recurring weak points across systems: lip‑sync and character consistency.
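Side-by-side testing of this kind is easier to judge with a small run log. The sketch below is illustrative only and is not a record of how the film was made: `Run`, `summarize`, the shot label, and the credit figures are all hypothetical placeholders, and no engine API is called.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Run:
    engine: str     # e.g. "VEO3" or "Runway"
    shot: str       # hypothetical shot label
    credits: float  # credits charged for this generation (placeholder value)
    usable: bool    # True if the result was good enough to keep


def summarize(runs):
    """Return {engine: (total_credits, usable_count, run_count)}."""
    totals = defaultdict(lambda: [0.0, 0, 0])
    for run in runs:
        entry = totals[run.engine]
        entry[0] += run.credits      # credits spent on this engine so far
        entry[1] += int(run.usable)  # generations worth keeping
        entry[2] += 1                # all generations, kept or not
    return {engine: tuple(entry) for engine, entry in totals.items()}


if __name__ == "__main__":
    # Same prompt, same shot, three generations across two engines.
    log = [
        Run("VEO3", "shot_01", 10, True),
        Run("VEO3", "shot_01", 10, False),
        Run("Runway", "shot_01", 25, False),
    ]
    for engine, (credits, usable, total) in summarize(log).items():
        print(f"{engine}: {credits} credits, {usable}/{total} usable")
```

Logging every generation, including the rejected ones, is what makes the comparison honest: an engine that needs several attempts per usable shot can cost more than one with a higher per-run price.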

Video Generation

- Google Flow / VEO3: ~60% of shots. Most consistent overall; sometimes stubborn with subtle prompt changes.

- Seedance 1 Pro: Stronger prompt adherence than VEO in my tests; best for VFX or specific scenes.

- Runway: Frustrating with Aleph chat, which produced frequent near‑duplicate regenerations; it acknowledged errors but still deducted credits.

- Luma AI Ray (Keyframe 3): Used for one shot. Incredibly capable, extremely credit‑hungry.

Video & Image Post

- Adobe Photoshop, Premiere, Audition: Generative Fill in Photoshop was invaluable; Premiere’s scene‑extension helped. Limitation: the Creative Cloud allowance felt tight, and I was soon nudged toward an extra Firefly plan.

- Enhancor: Excellent for skin texturing, character consistency, and image edits using Nano Banana. (In my experience, Nano Banana behaved better inside Enhancor than in Gemini Pro.)

- Topaz Labs: Invaluable for upscale, de‑noise, and detail recovery while preserving a filmic tone.

Image Generation

- Midjourney and Nano Banana: Look‑development, concept frames, and stills.

Sound & Dialogue / Lip‑Sync

- DZINE: Best lip‑sync results of all the tools I tried.

- ElevenLabs: Dialogue with cloned voices from real people. Setup is tedious but worth it; FX are solid.

- Suno: Consistent and accurate, but trends “commercial” even with careful prompting.

- Stable Audio: A great discovery; understands unusual musical structures and follows prompts accurately.

Prompting Workflow

- ChatGPT: Good for turning cinematic text prompts into JSON for Google VEO. Still requires vigilance: double‑check everything and don’t rely on its memory recall.

- Gemini Pro: Weaker at pure prompt generation than ChatGPT in my tests, but helpful for organizing scene structure.

Avatars

- Caimera.ai: Prometea’s avatar originated here, adapted from a fashion‑focused model I built early in the process. I didn’t invest time in other avatar tools, because Caimera provided the control and look I needed and doubled as a fast way to generate stylized storyboard frames.

Production Notes & Gotchas

- Parallel prompting across engines increases costs; track credits closely.

- File hygiene for Stable Audio: avoid punctuation except hyphens “-” in prompts to prevent corrupted filenames on some systems.

- Maintain a master look bible (palette, grain, lens notes) to stabilize cross‑engine consistency.

- Save intermediate versions; some tools overwrite quietly when regenerating near‑duplicates.
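
The Stable Audio file-hygiene note can be applied mechanically before a prompt is pasted in. This is a minimal sketch, assuming the download filename is derived from the prompt text, as the note implies; `sanitize_prompt` is a hypothetical helper and not part of any Stable Audio tooling.

```python
import re


def sanitize_prompt(prompt: str) -> str:
    """Remove punctuation other than the ASCII hyphen from a prompt."""
    # Keep letters, digits, whitespace, and "-"; drop every other character.
    cleaned = re.sub(r"[^\w\s-]", "", prompt)
    # \w also matches underscores, so turn those into spaces.
    cleaned = cleaned.replace("_", " ")
    # Collapse the whitespace runs left behind by removed characters.
    return re.sub(r"\s+", " ", cleaned).strip()


if __name__ == "__main__":
    print(sanitize_prompt("Slow, droning pad: 60 BPM (desert wind) - no drums!"))
```

Only the ASCII hyphen survives, so typographic dashes and non-breaking hyphens are removed along with the rest of the punctuation; it is worth reading the cleaned prompt once before submitting it.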